Peergos - your private online space

Peergos is building the next web - the private web, where end users are in control. Imagine web apps being secure by default and unable to track you. Imagine being able to control exactly what personal data each web app can see. Imagine never having to log in to an app ever again. You own your data and decide where it is stored and who can see it. At Peergos, we believe that privacy is a fundamental human right and we want to make it easy for everyone to interact online in ways that respect this right.

The foundation of Peergos is a peer-to-peer encrypted global filesystem with fine-grained access control designed to be resistant to surveillance of data content or friendship graphs. It will have a secure messenger, with optional interoperability with email, and a totally private and secure social network, where users are in control of who sees what (enforced cryptographically). Our motto at Peergos is, "Control your data, control your destiny."

The name Peergos comes from the Greek word Πύργος (Pyrgos), which means stronghold or tower, spelt phonetically to give a nice connection to being peer-to-peer. It is pronounced peer-goss, as in gossip.

For a less technical introduction see peergos.org, or to dive right into the source code, see github.

WARNING: Peergos has had an audit by Cure53, but is still in active development. Some of the features in this documentation are yet to be implemented, in particular, onion routing is not used yet.

Aims

- Securely and privately store files in a peer to peer network which has no central node and is generally difficult to disrupt or surveil

- Secure sharing of such files with other users of the network without visible meta-data (who shares with whom)

- Trust free servers and storage. Clients do not need to trust their server or storage. Data, metadata, and contact lists are never exposed to your server.

- Beautiful user interface that any computer or mobile user can understand

- Secure messaging, with optional interop with actual email

- Independent of the central SSL CA trust architecture, and the domain name system

- Self hostable - A user should be able to easily run Peergos on a machine in their home and get their own Peergos storage space, and social communication platform from it

- Allow users to run web apps within Peergos (and served directly from Peergos) which are totally sandboxed and unable to track users or exfiltrate data

- Secure web interface as well as desktop clients, native folder sync, and a command line interface

- Do not rely on any cryptocurrency

- Enable users to collaborate, editing a document in place concurrently

- Secure real time chat, and video conferencing able to handle 100s of participants, fully end-to-end encrypted

- Social account recovery - designate N of M friends who can collaborate to recover your account if you lose your password

- Optional use of U2F for securing login

Features

- Self hosting

- Peer-to-peer

- Multi-device login

- Web interface

- Social

- Sharing

- Large files

- Streaming

- Fast seeking

- Resumable uploads

- File viewers

- Secret links

- Migration

- Folder Sync

- Open source

- Custom Apps

- Private Websites

Self hosting

Peergos is fully self hostable. You can run peergos from your own home or server to obtain as much storage and bandwidth as you need, whilst still transparently interacting with anyone using any other server. Because the server only ever sees encrypted data you can also tell it to directly store your data in a standard cloud storage provider like Backblaze or Amazon without any loss of privacy.

Peer-to-peer

Peergos is built with a peer-to-peer architecture to protect against censorship and surveillance and to improve resiliency. There is no central point through which an attacker could monitor all file transfers. There is also no central DNS name or TLS certificate authority that could be used to attack the network.

Trust free servers

Peergos is designed so that clients do not need to trust the Peergos server they are talking to. Hashes and signatures are all verified client side for reads and writes. Data can also be mirrored to transparently provide redundancy.

Multi-device login

Peergos is naturally multi-device. You can log in to your account from any device, and through any Peergos server. It is not tied to any other data like your phone number or email address. All you need is your username and your password. Any modern browser will suffice, including mobile.

Web interface

In keeping with our aim to be as convenient to use as existing centralised services, Peergos has a web interface which can be used instead of a native application. This interface does not require any special knowledge, especially not of cryptography or keys, but should nonetheless encourage and enforce safe practices. The web interface does not load any code from third-party servers and is entirely self hosted. You can even load it directly from IPFS and log in!

The web interface can be accessed from a public server over https or from your machine if you run Peergos locally.

Social

Peergos users can send follow requests to each other. If accepted, the other user can then share files with you or send you messages. Following is a one-way mechanism: if Agata follows Bartek then Bartek can share files with Agata. If Bartek is also following Agata, then she can also share files with Bartek. Following can be revoked by either user at any point.

Your friend list is kept encrypted in your own Peergos space, hidden from other users and the server.

There is also a secret link mechanism for sharing files with people who do not have peergos accounts.

Sharing

A file or folder can be shared with any user who is following you. This access can be read only or writable. Access can be revoked at any time without affecting access for anyone else the item is shared with. This is all achieved cryptographically with capabilities and lazy re-encryption.

Large files

There is no file size limit in Peergos apart from what will fit in your storage quota. Despite doing client side encryption/decryption we can still upload or download arbitrarily large files sending directly from/to the filesystem.

Streaming

Peergos is naturally streaming: despite having to decrypt files in the client, we can still stream large files directly with low RAM usage. This allows us to stream large videos in the browser directly to an HTML5 video element.

Fast seeking

Seeking forward or backward in huge files is both very fast (O(1) in I/O requests) and fully authenticated. When you start to retrieve a file from a capability you first retrieve the cryptree node (encrypted metadata) of the first chunk, which is located at the given champ key (32 random bytes).

In the FileProperties there is a stream secret (32 random bytes). To find the next chunk you calculate sha256(stream secret + current champ key). This means to skip forward X bytes you repeat this, doing X/5MiB sha256 hashes locally. Then finally do a champ lookup at the end to retrieve the chunk you asked for. To seek backward you just keep a reference to the start and seek forwards.

This seeking is both very fast, and doesn't leak to the server which chunks are part of the same file.
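
The chain of hashes described above can be sketched in a few lines. This is a minimal illustration for Node.js, not the Peergos implementation: it assumes that "+" means byte concatenation with the stream secret first, and the names nextChampKey and champKeyForOffset are made up for this example.

```javascript
const { createHash } = require('crypto');

// Chunk size from the text above: each chunk covers 5 MiB of the file.
const CHUNK_SIZE = 5 * 1024 * 1024;

// Assumption: the next label is sha256 over (stream secret || current champ key).
function nextChampKey(streamSecret, currentKey) {
  return createHash('sha256').update(streamSecret).update(currentKey).digest();
}

// Walk the chain locally from the first chunk's key to the chunk holding `offset`.
// No network requests happen here; only the final key is used for a champ lookup.
function champKeyForOffset(streamSecret, firstKey, offset) {
  const hops = Math.floor(offset / CHUNK_SIZE);
  let key = firstKey;
  for (let i = 0; i < hops; i++) {
    key = nextChampKey(streamSecret, key);
  }
  return key;
}
```

Seeking to byte 12 MiB, for example, takes two local hashes followed by one champ lookup. Without the stream secret, the labels of consecutive chunks look unrelated to each other.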

Resumable Uploads

It can be a pain if you're uploading a huge file to some service and then your internet cuts out half way and you need to start again. Peergos avoids this by implementing resumable uploads. If you try and upload a file that failed halfway for whatever reason, then it will ask you if you want to continue the upload. This even works if you're uploading from a different device!

We achieve this by storing an upload transaction file with details of the upload in your peergos space during the upload.

File viewers

There are several built-in file viewers in Peergos. We have viewers for the following file types:

- images

- videos

- audio

- binary (hex viewer)

We have editors for the following formats:

- text

- markdown

- code

We support the following languages in the code editor:

- c

- c++

- Clojure

- css

- diff

- Go

- html

- Java

- Javascript

- Kotlin

- python

- Ruby

- Rust

- Scala

- shell

- tex

- xml

- yaml

Secret links

A secret link can be generated to point to any file or folder. Anyone with a (Javascript enabled) web browser can view such a link. This is a capability based link which includes the necessary key in the hash fragment of the url. A secret link doesn't expose the file to the network, or indeed to anyone who doesn't have the link itself because the key material isn't sent to the server.

An example of a secret link to a folder is:

Migration

Your identity in Peergos is not tied to any particular server. Compared to other federated social networks where moving server typically involves losing your social network and meta-data, if not data too, Peergos allows you to transparently migrate between servers and storage providers without any action required from your friends and without any data loss.

This means, for example, you could start out by creating an account on your device which gives you limited storage and uptime, then effortlessly migrate to a paid server, or to your own server, when you realise how awesome Peergos is.

Folder Sync

Peergos has the ability to do standard directory syncing and transparently mount a folder to your host operating system. This is achieved with a FUSE binding (or equivalent for Windows and macOS).

Open source

Peergos is fully open-source, both clients and server (including the web interface). The main interface is a web UI, but Peergos can also be accessed using a Java client on the command line, or with a FUSE mount of your Peergos filesystem.

No part of our infrastructure, apart from TLS and Peergos private keys, is secret. We also have reproducible builds (we don't use npm, browserify, etc.), and we vendor all dependencies so any historic git commit should be buildable without any external data.

Eventually we want to self host our git repos in Peergos itself.

Custom Apps

Peergos Apps are a way to extend the Peergos platform to add custom functionality

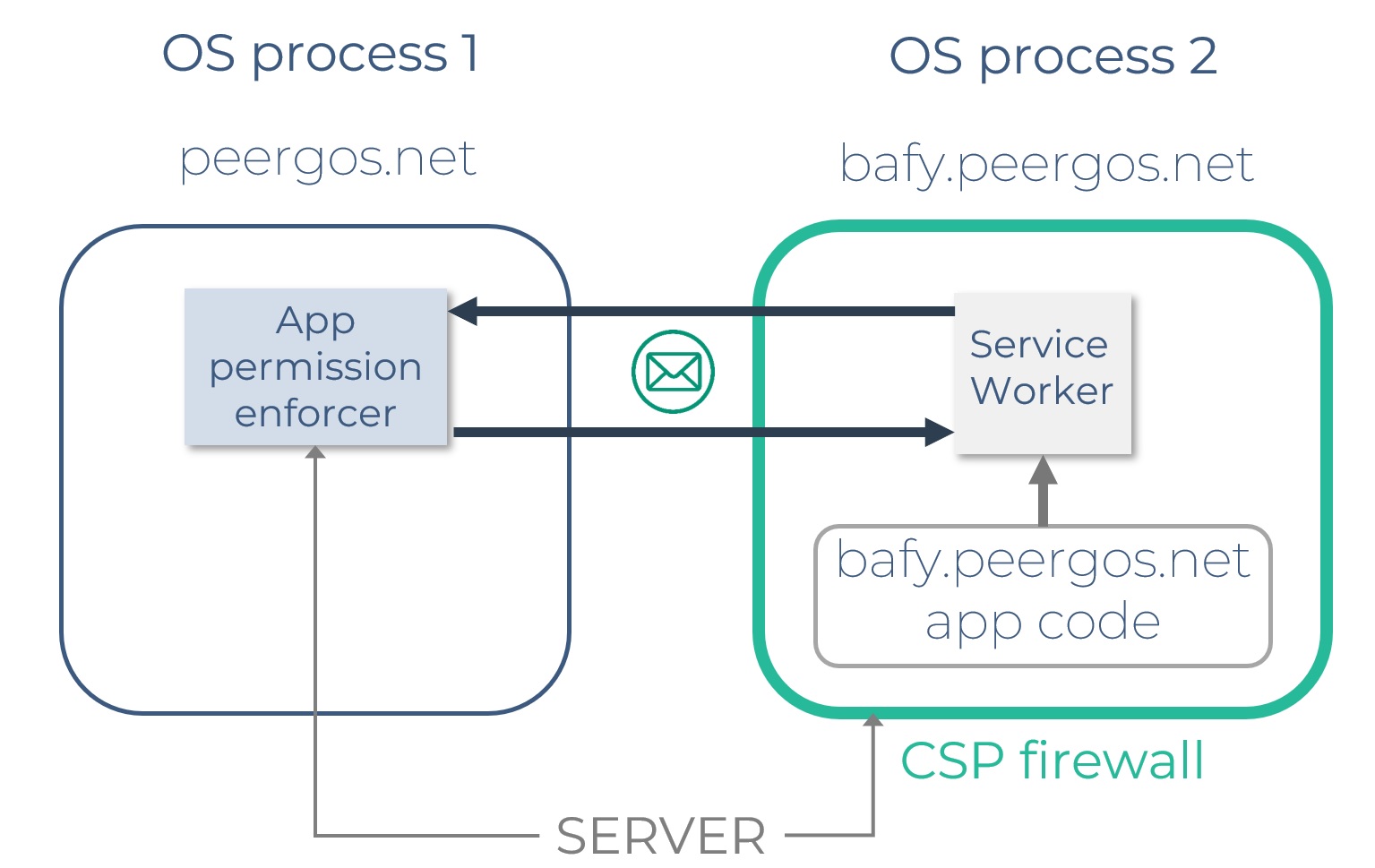

When an app is run, its HTML5 assets are rendered in a unique hostname (sha256(app path).$peergos-domain) of the peergos server, e.g. https://bciqjmdntozhuanb2c3ka5vtqpux75j5symbyomhkpnilndngl6iaspy.peergos.net. The app domain is isolated from the main peergos domain in a separate OS process, and from other apps. The app domain is also locked down with CSP http headers so it cannot make any external requests which could be used to exfiltrate data [0]. Requests made by the app are intercepted in a service worker and translated to post messages which are sent to the main peergos tab. That is where the requests are checked for validity and permissions are enforced. By default, an app has no permissions and can only read its own assets. Running an app also doesn't reveal its assets to the server - they are served via a service worker and post messages to the main peergos tab, and thus benefit from all the existing privacy protections in Peergos.

[0] This is currently not true until browsers implement a webrtc CSP which blocks any webrtc connections. There are open browser issues for this in Firefox and Chrome. So only install apps from authors you trust for now, unless they require no permissions, which is safe.

Use cases:

- Media Player App. The App should appear as a context menu item when a media file is selected on the Drive screen.

- Word Processor App. As well as having read access to a document file, the App should be able to overwrite the contents of the document file.

- Image Gallery App. The App should be able to read image files from the selected Folder tree.

- White Board App. The App will appear on the Launcher page. It can create, retrieve, update, append to and delete files within its own App space.

Example apps

You can find some example apps here: https://github.com/Peergos/example-apps

Anatomy of a Peergos App

An app consists of plain HTML/Javascript/CSS packaged in a folder

The App is described by a mandatory manifest file called peergos-app.json

App Folder Structure

assets - Must contain index.html as an entry point

data - Files under the control of the App

peergos-app.json - manifest file

Peergos-app.json

This file describes the App. It also indicates the permissions required for the App to function

Fields:

schemaVersion - Currently always set to 1

displayName - Used for display. Limited to 25 characters (alphanumeric plus dash and underscore).

version - Format of Major.Minor.Patch-Suffix. Example: 0.0.1-initial

description - Text. Length must not exceed 100 characters

author - Text. Length must not exceed 32 characters

fileExtensions - Array of target file extensions e.g. ["jpg","png","gif"]

mimeTypes - Array of target mime types e.g. ["application/zip","application/vnd.peergos-todo","video/quicktime"]

fileTypes - Another way to target files e.g. ["image", "video", "audio", "text"]

launchable - Indicates App can be opened on the Launcher page

folderAction - Indicates App acts on folders

appIcon - filename of image to use as icon on launcher page. Must be available in assets folder

permissions - see below

Permissions:

STORE_APP_DATA - Can store and read files in a folder private to the app

EDIT_CHOSEN_FILE – Can modify file chosen by user

READ_CHOSEN_FOLDER – Can read contents of folder chosen by user

EXCHANGE_MESSAGES_WITH_FRIENDS - Can exchange messages with friends

ACCESS_PROFILE_PHOTO - Can retrieve profile photos shared with you

CSP_UNSAFE_EVAL - Allow app to modify its own code via calls to eval()

A minimal peergos-app.json file would look like:

{

"displayName": "App",

"description": "does something",

"launchable": true

}

These are already quite powerful, but we plan to add more permissions as we see more use cases.
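
The field limits listed above can be checked before installing an app. The following is only a sketch: validateManifest is a hypothetical helper, not part of Peergos. It assumes that displayName is the only field that must be present, that the version suffix is optional, and that the suffix is alphanumeric.

```javascript
// Hypothetical helper: checks a parsed peergos-app.json against the documented limits.
// Returns a list of problems; an empty list means no documented limit was violated.
function validateManifest(manifest) {
  const errors = [];
  const tooLong = (value, max) => typeof value !== 'string' || value.length > max;

  // displayName: up to 25 characters, alphanumeric plus dash and underscore.
  if (typeof manifest.displayName !== 'string' ||
      !/^[A-Za-z0-9_-]{1,25}$/.test(manifest.displayName)) {
    errors.push('displayName must be 1-25 characters: alphanumeric, dash or underscore');
  }
  // description: text of at most 100 characters.
  if (manifest.description !== undefined && tooLong(manifest.description, 100)) {
    errors.push('description must be text of at most 100 characters');
  }
  // author: text of at most 32 characters.
  if (manifest.author !== undefined && tooLong(manifest.author, 32)) {
    errors.push('author must be text of at most 32 characters');
  }
  // version: Major.Minor.Patch with an optional -Suffix (assumed alphanumeric).
  if (manifest.version !== undefined &&
      !/^\d+\.\d+\.\d+(-[A-Za-z0-9]+)?$/.test(String(manifest.version))) {
    errors.push('version must look like Major.Minor.Patch-Suffix, e.g. 0.0.1-initial');
  }
  return errors;
}
```

Calling validateManifest on the minimal manifest shown above returns an empty list, while a displayName containing a space is reported as an error.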

Peergos REST API

The following endpoints are available:

/peergos-api/v0/data/path.to.file – The data folder is where the App can store and retrieve files

/peergos-api/v0/form/path.to.file – An app can POST a HTML Form and have the results stored in a file of the same name in the data folder.

/peergos-api/v0/chat/ - An app can use the chat api for communication between friends.

If an App is launched from a file/folder context menu item, the path of the file/folder will be available via:

let url = new URL(window.location.href);

let filePath = url.searchParams.get("path");

Dark mode can be detected via the theme param

let theme = url.searchParams.get("theme"); // current values: ['dark-mode', '']

Drive - The following HTTP actions are supported:

Note: /peergos-api/v0/data/ is only relevant for the App's data folder. It is not necessary when referencing a file in the App's assets folder or the folder/file selected by the user.

GET – Retrieve a resource. Can be a file or folder

Response code: 200 – success.

404, 400 – request failed

Notes:

- If the resource is a folder, the response will look like: {files:["file1.txt", "file2.txt"], subFolders:["folder"]}

- If the file is a media file with a thumbnail, add ?preview=true to the request to have the thumbnail returned in the response as a Base64 string.

POST – Create a resource

Response code: 201 – create success. See Response header field: location

200 for POST using form/

400 – request failed

PUT – update a resource

Response code: 201 – create success. See Response header field: location

200 – for update success

400 – request failed

DELETE – delete a file

204 – delete success

400 – request failed

PATCH – append to a file (the only supported patch operation)

204 – Append success. See Response header field: Content-Location

400 – request failed

PUT|POST - save a file (launches a dialog)

/peergos-api/v0/save/filename.txt

Request body set to contents of file to save

Response code: 200 or 201 – success.

GET - launch folder picker

/peergos-api/v0/folders

Response code: 200 and an array of the selected paths.

GET - launch file picker

/peergos-api/v0/file-picker Optional url parameter ?extension="json" to filter files shown in picker.

Response code: 200 and an array containing the selected file path.
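
As an illustration of the data endpoints above, an app with the STORE_APP_DATA permission might save and read a file like this. It is a sketch under assumptions: saveNote and readNote are made-up names, the file name is arbitrary, and the optional fetchFn parameter exists only so the functions can be exercised outside a browser.

```javascript
const DATA_API = '/peergos-api/v0/data/';

// Create or overwrite a file in the app's data folder with PUT.
// Per the status codes above, 201 means created and 200 means updated.
async function saveNote(fileName, text, fetchFn = globalThis.fetch) {
  const response = await fetchFn(DATA_API + fileName, { method: 'PUT', body: text });
  if (response.status !== 200 && response.status !== 201) {
    throw new Error('save failed with status ' + response.status);
  }
  return response.status === 201 ? 'created' : 'updated';
}

// Read a file back with GET; returns null when the request fails (400 or 404).
async function readNote(fileName, fetchFn = globalThis.fetch) {
  const response = await fetchFn(DATA_API + fileName);
  if (response.status !== 200) {
    return null;
  }
  return response.text();
}
```

Inside an app this would be used as `await saveNote('notes.txt', 'hello')` followed by `await readNote('notes.txt')`. The requests never leave the browser directly: as described earlier, they are intercepted by the service worker and checked against the app's permissions.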

Chat V0 - The following HTTP actions are supported (see chat-api in example-apps):

See Chat V1 below for a more comprehensive API to support more complex apps

GET – Retrieve a list of all chats created by this App

/peergos-api/v0/chat/

Response code: 200 – success.

Response:

{chatId: chatId, title: title}

GET – Retrieve chat messages

/peergos-api/v0/chat/:chatId

Url Parameters:

from - paging from index

to - paging to index

Response code: 200 – success.

Response:

{messages:[], count: messagesRead}

Contents of messages array:

{type: 'Application', id: messageHash, text: text, author: author, timestamp: timestamp}

{type: 'Join', username: username, timestamp: timestamp}

POST - create chat

/peergos-api/v0/chat/

Request FormData

parameters:

maxInvites - Numeric

Response code: 201 – success.

Response header:

Location - chatId

PUT - send message

/peergos-api/v0/chat/:chatId

Request FormData

parameters:

text - Contents of message

Response code: 201 – success.

Chat V1 - The following HTTP actions are supported (see chat folder in example-apps):

GET – Retrieve chats for current App

/peergos-api/v1/chat/

Response code: 200 – success.

Response:

{chats: [], latestMessages: []}

Contents of chats array:

{chatId: string, title: string, members: [usernames], admins: [usernames] }

Contents of latestMessages array (array entries match corresponding chats array):

{message: string, creationTime: timestamp (localdatetime)}

GET – Retrieve chat messages

/peergos-api/v1/chat/:chatId

Url Parameters:

startIndex - message index

Response code: 200 – success.

Response:

{chatId: string, startIndex: url param from request, messages: array of message json, hasFriendsInChat: number of friends in current chat membership}

Where message is

{ messageRef: string uuid, author: username, timestamp: localdatetime,

type: can be one of RemoveMember|Invite|Join|GroupState|ReplyTo|Delete|Edit|Application ,

removeUsername: set if type is RemoveMember, inviteUsername: set if type is Invite, joinUsername: set if type is Join,

editPriorVersion: set if type is Edit, deleteTarget: set if type is Delete, replyToParent: set if type is ReplyTo,

text: set if type is Application|Edit|ReplyTo, envelope: base64 encoded opaque object,

groupState: set if type is GroupState, attachments : array of attachment json}

Where GroupState is

{ key: payload.key, value: payload.value}

Where attachment is

{fileRef: FileRef json, mimeType: mimeType of file, fileType: audio|video|image, thumbnail: base64 encoded thumbnail for file}

(see the FileRef description in the /attachment API response)

DELETE - delete a chat

/peergos-api/v1/chat/:chatId

Response code: 204 – success.

DELETE - delete an attachment

/peergos-api/v1/chat/filePath

Response code: 204 – success.

POST - launch chat group membership modal in order to create a new chat

/peergos-api/v1/chat/

Response code: 201 – success. 400 - failure or modal closed

Response (location response header field):

{chatId: string, title: string, members: [username], admins: [username]};

POST - launch chat group membership modal in order to modify membership of existing chat

/peergos-api/v1/chat/:chatId

Response code: 200 – success. 400 - failure or modal closed

POST - launch gallery modal to display media attachment

/peergos-api/v1/chat/?view=true

Request body:

byte[] of FileRef json (see fileRef field in API call /attachment response)

Response code: 200

POST - download a media attachment

/peergos-api/v1/chat/?download=true

Request body:

byte[] of FileRef json (see fileRef field in API call /attachment response)

Response code: 200

POST - upload a media attachment

/peergos-api/v1/chat/attachment?filename=filename-of-file-to-upload

Request body:

byte[] of attachment's content

Response code: 201

Response (location response header field):

{fileRef: FileRef json, hasMediaFile: boolean, hasThumbnail: boolean, thumbnail: base64 string of thumbnail image,

fileType: file type string ie audio, image, video, mimeType: mimeType string, size: number }

where FileRef is

{path: absolute path to file, cap: opaque capability object, contentHash: hash of file contents}

PUT - send a message

/peergos-api/v1/chat/:chatId

Request body:

to create message

{ createMessage : { text: string, attachments: array of FileRef json} }

to edit existing message

{ editMessage : { text: string, messageRef: uuid of message to edit} }

to reply to an existing message

{ replyMessage : { text: string, attachments: array of FileRef json, replyTo: envelope of message to reply to} }

to delete an existing message

{ deleteMessage : { messageRef: uuid of message to delete} }

Response code: 201
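
The four request bodies above can be built with small helper functions. This sketch assumes the body is sent as JSON text. The helper names and the sendChatRequest function are illustrative only and are not part of the Peergos API.

```javascript
// Builders for the four PUT bodies accepted by /peergos-api/v1/chat/:chatId.
const createMessage = (text, attachments = []) =>
  ({ createMessage: { text, attachments } });
const editMessage = (text, messageRef) =>
  ({ editMessage: { text, messageRef } });
const replyMessage = (text, replyTo, attachments = []) =>
  ({ replyMessage: { text, attachments, replyTo } });
const deleteMessage = (messageRef) =>
  ({ deleteMessage: { messageRef } });

// Hypothetical sender: PUTs one of the bodies above to the given chat.
// Resolves to true when the documented success code (201) is returned.
async function sendChatRequest(chatId, body, fetchFn = globalThis.fetch) {
  const response = await fetchFn('/peergos-api/v1/chat/' + chatId, {
    method: 'PUT',
    body: JSON.stringify(body),
  });
  return response.status === 201;
}
```

A reply would then be sent with `await sendChatRequest(chatId, replyMessage('Sounds good', message.envelope))`, where `message` is an entry from the messages array returned by the GET call.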

Profile:

GET – Launch the profile modal for the requested Peergos user (must be friend of current user)

/peergos-api/v0/profile/:username

Response code: 200 – success. 400 - failure.

GET – Retrieve the profile thumbnail image for the requested Peergos user (must be friend of current user + app has permission ACCESS_PROFILE_PHOTO)

/peergos-api/v0/profile/:username?thumbnail=true

Response code: 200 – success. 400 - failure.

Response:

{profileThumbnail: base64 data}

Developing a Peergos App

Select the peergos-app.json file and choose ‘Run App’ to launch the app from the current directory. This is only available for launchable apps.

Tip: During development, set the launchable property to true to get a fast feedback cycle.

The install process will detect if an existing App has the same name. It will display the version of the already installed App.

During install the App's files are copied to an internal folder. Any existing contents in the assets folder will be replaced.

The contents of the data folder will be added to.

The previously installed peergos-app.json file is copied to the App’s data directory as ‘peergos-app-previous.json’.

Private Websites

You can turn any folder in Peergos into a private website, benefitting from the built-in access control and privacy. Such websites can be viewed using a built-in browser app. This browser isolates websites from different owners using different sub domains just as different apps are themselves isolated. Such websites are locked down such that external communication is impossible [0]. This means that 3rd party tracking is impossible. The beautiful thing here is that 1st party tracking is also not easy (and can be made impossible) because the paths are resolved locally in the browser and all requests go through the peergos server you are connecting to. This means browser fingerprinting is irrelevant for such websites because no information can be exfiltrated!

You can share websites privately with friends on peergos the same way you share any file or folder. You can even share them with anyone via a secret link!

A private website can also link to any other websites in Peergos, including those owned by others, by the human readable path in the Peergos global filesystem. Following such a link will only work if you also have read access to the destination.

The possibilities are huge here for a better, more private web that protects people from surveillance. You can also edit your websites directly in Peergos. It has never been easier to host your own website securely!

[0] This is currently not true until browsers implement the webrtc CSP which allows us to block any webrtc connections which can be used to exfiltrate data.

Peergos architecture

Logical

The logical architecture of Peergos consists of the following:

- Content addressed storage: a data store with a mapping from the hash of a block of data to the data itself

- Mutable pointers: a mapping from a public key to a hash

- PKI: a global append only log for the username <==> {identity public key, storage public key} mappings

- Social: each user designates a server to which other users send follow requests for them (the server can't see the source user). This is the same as the storage server for that user and is identified and contacted via its public key.

Physical

Each user must have at least one Peergos server (which includes an instance of IPFS). This server stores their data, their mutable pointers and any pending follow requests for them. There is also the global append only log for the PKI which is mirrored on every node. Communication between IPFS instances is done over encrypted TLS 1.3 streams.

Immutable data

The immutable data store is provided by IPFS and allows anyone authorised to retrieve cipher text from its hash through any Peergos node. Note that IPFS is used in a fully trustless manner. Every hash and signature is checked client side during reads and writes. The underlying storage can be provided by the local harddisk or any S3 compatible object storage without loss of privacy. Access to raw blocks is controlled using S3 V4 signatures from an allowed Block Access Token (BAT). Each block specifies which BATs are allowed to retrieve it. Any node that retrieves such a block enforces the same auth on it.

The interface for this storage is ContentAddressedStorage, with the following methods:

/**

*

* @return The identity (hash of the public key) of the storage node we are talking to

*/

CompletableFuture<Cid> id();

/**

*

* @param owner

* @return A new transaction id that can be used to group writes together and protect them from being garbage

* collected before they have been pinned.

*/

CompletableFuture<TransactionId> startTransaction(PublicKeyHash owner);

/**

* Release all associated objects from this transaction to allow them to be garbage collected if they haven't been

* pinned.

* @param owner

* @param tid

* @return

*/

CompletableFuture<Boolean> closeTransaction(PublicKeyHash owner, TransactionId tid);

/**

*

* @param owner The owner of these blocks of data

* @param writer The public signing key authorizing these writes, which must be owned by the owner key

* @param signedHashes The signatures of the sha256 of each block being written (by the writer)

* @param blocks The blocks to write

* @param tid The transaction to group these writes under

* @return

*/

CompletableFuture<List<Cid>> put(PublicKeyHash owner,

PublicKeyHash writer,

List<byte[]> signedHashes,

List<byte[]> blocks,

TransactionId tid);

/**

*

* @param hash

* @return The data with the requested hash, deserialized into cbor, or Optional.empty() if no object can be found

*/

CompletableFuture<Optional<CborObject>> get(Cid hash, Optional<BatWithId> bat);

/**

* Write a block of data that is just raw bytes, not ipld structured cbor

* @param owner

* @param writer

* @param signedHashes

* @param blocks

* @param tid

* @param progressCounter

* @return

*/

CompletableFuture<List<Cid>> putRaw(PublicKeyHash owner,

PublicKeyHash writer,

List<byte[]> signedHashes,

List<byte[]> blocks,

TransactionId tid,

ProgressConsumer<Long> progressCounter);

/**

* Get a block of data that is not in ipld cbor format, just raw bytes

* @param hash

* @return

*/

CompletableFuture<Optional<byte[]>> getRaw(Cid hash, Optional<BatWithId> bat);

CompletableFuture<List<byte[]>> getChampLookup(PublicKeyHash owner, Cid root, byte[] champKey, Optional<BatWithId> bat);
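
The trustless use of storage described in this section comes down to re-hashing every block on the client. The sketch below is a toy model for Node.js, not the ContentAddressedStorage interface itself: it uses a hex sha256 string in place of a Cid, leaves out owners, signatures, transactions and BATs, and the names ToyBlockStore and verifiedGet are invented for illustration.

```javascript
const { createHash } = require('crypto');

const sha256Hex = (bytes) => createHash('sha256').update(bytes).digest('hex');

// Toy stand-in for a storage node: a mapping from the hash of a block to the block.
class ToyBlockStore {
  constructor() {
    this.blocks = new Map();
  }
  put(block) {
    const hash = sha256Hex(block);
    this.blocks.set(hash, block);
    return hash;
  }
  get(hash) {
    return this.blocks.get(hash);
  }
}

// Client-side read: never trust the node, re-hash whatever it returns.
function verifiedGet(store, hash) {
  const block = store.get(hash);
  if (block === undefined) {
    return undefined; // nothing stored under this hash
  }
  if (sha256Hex(block) !== hash) {
    throw new Error('block does not match the requested hash');
  }
  return block;
}
```

Because the address of a block is its hash, a node that returns altered data is detected immediately, which is why the underlying storage can be any untrusted disk or object store.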

Mutable

Mutable pointers in Peergos are just a mapping from a public key to a root hash. Clearly, being mutable, they need some kind of synchronization or concurrent data structure. Each user lists an ipfs node id (the hash of its public key) which is responsible for synchronising their writes and publishing the latest root hashes. This means the global filesystem is sharded by username and each user can use an ipfs instance (or cluster) with sufficient capability for their bandwidth requirements.

Initially each user's file system is under a single public key. Additional keys are generated when granting write access.

The interface for MutablePointers has the following methods:

/** Update the hash that a public key maps to (doing a cas with the existing value)

*

* @param owner The owner of this signing key

* @param writer The public signing key

* @param writerSignedBtreeRootHash the signed serialization of the HashCasPair

* @return True when successfully completed

*/

CompletableFuture<Boolean> setPointer(PublicKeyHash owner, PublicKeyHash writer, byte[] writerSignedBtreeRootHash);

/** Get the current hash a public key maps to

*

* @param writer The public signing key

* @return The signed cas of the pointer from its previous value to its current value

*/

CompletableFuture<Optional<byte[]>> getPointer(PublicKeyHash owner, PublicKeyHash writer);

Each signed update is actually a pair of hashes (previous, current) and a monotonically increasing sequence number. This means the server can reject invalid updates, and that the updates form a total order. The ordering means clients can cache the most recent version of a pointer to defend against being served stale older versions.
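
The compare-and-swap rule and the client-side cache can be modelled as follows. This is a simplified sketch, not the MutablePointers implementation: signatures are omitted, hashes are plain strings, and ToyPointerStore and PointerCache are hypothetical names.

```javascript
// Toy pointer server: accepts an update only if it extends the current state.
class ToyPointerStore {
  constructor() {
    this.latest = new Map(); // writer -> { previous, current, sequence }
  }
  setPointer(writer, update) {
    const existing = this.latest.get(writer);
    const expectedPrevious = existing ? existing.current : null;
    const lastSequence = existing ? existing.sequence : -1;
    // Reject if the update was not built on the current value,
    // or if its sequence number does not increase.
    if (update.previous !== expectedPrevious || update.sequence <= lastSequence) {
      return false;
    }
    this.latest.set(writer, update);
    return true;
  }
  getPointer(writer) {
    return this.latest.get(writer);
  }
}

// Client-side cache: remembers the newest update seen for each writer
// so that an older one served later can be recognised as stale.
class PointerCache {
  constructor() {
    this.seen = new Map();
  }
  accept(writer, update) {
    const known = this.seen.get(writer);
    if (known && update.sequence < known.sequence) {
      return false; // stale: older than something already seen
    }
    this.seen.set(writer, update);
    return true;
  }
}
```

Two writers racing from the same previous hash cannot both succeed: the second update no longer matches the current value and is rejected, so that writer must re-read the pointer and retry.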

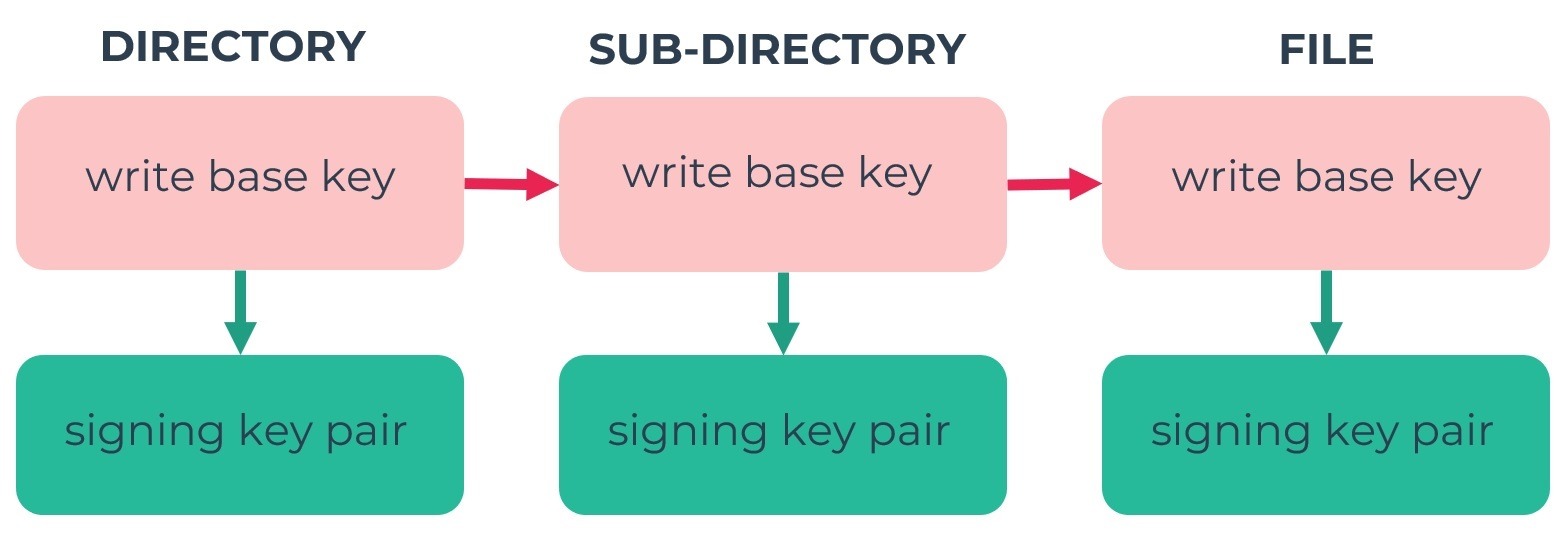

Writing subspaces

Each user has a randomly generated writing key pair which controls writes to their filesystem. They can create new writing key pairs for any subtree, for example when granting write access to a file or folder. If desired, a given writing key can be quota controlled, to prevent users to which you've granted write access to a file from filling your data store.

Every signing keypair, including your identity keypair and your root writing keypair, maps to a data structure called WriterData. A WriterData can contain merkle links to roots of merkle champs and various public keys. The full data structure is listed below. If any of the properties are empty they do not contribute to the size of the serialized WriterData.

// the public signing key controlling this subspace

PublicKeyHash controller;

// publicly readable and present on owner keys

Optional<SecretGenerationAlgorithm> generationAlgorithm;

// This is the root of a champ containing publicly shared files and folders (a lookup from path to capability)

Optional<Multihash> publicData;

// The public encryption key to encrypt follow requests to

Optional<PublicKeyHash> followRequestReceiver;

// Any keys directly owned by the controller, that aren't named

Set<PublicKeyHash> ownedKeys;

// Any keys directly owned by the controller that have specific labels

// only used for the PKI

Map<String, PublicKeyHash> namedOwnedKeys;

// This is the root of a champ containing the controller's filesystem (present on writer keys)

Optional<Multihash> tree;

Merkle-CHAMP

The main network visible data structure in Peergos is a merkle compressed hash array mapped trie, or merkle-champ. This data structure is explained in the next section. All the data under a given writing keypair has its own merkle-champ. This is just a mapping from random 32 byte labels to cipher-text blobs. These blobs are cryptree nodes containing the cryptree data structure, and, in the case of a file section, up to 5 merkle links to encrypted file fragments (Max 1 MiB each). A merkle-link is just a hash that references another ipfs object. Each 5 MiB section of a file is stored under a different random label in the champ, and similarly with large directories.
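
To make the sizes above concrete, the following sketch counts how many champ entries and fragments a file of a given size would need. It is back-of-the-envelope arithmetic only: fileLayout is a made-up helper, and it ignores padding, metadata and any special handling of very small files.

```javascript
const MiB = 1024 * 1024;
const SECTION_SIZE = 5 * MiB;  // one champ entry (random label) per 5 MiB section
const FRAGMENT_SIZE = 1 * MiB; // each section links to up to 5 fragments of at most 1 MiB

// Rough layout of a non-empty file of `fileSize` bytes.
function fileLayout(fileSize) {
  if (!(fileSize > 0)) {
    throw new RangeError('fileSize must be a positive number of bytes');
  }
  const sections = Math.ceil(fileSize / SECTION_SIZE);
  const fragments = Math.ceil(fileSize / FRAGMENT_SIZE);
  const lastSectionBytes = fileSize - (sections - 1) * SECTION_SIZE;
  return {
    sections,
    fragments,
    fragmentsInLastSection: Math.ceil(lastSectionBytes / FRAGMENT_SIZE),
  };
}
```

Under these assumptions a 12 MiB file occupies 3 champ entries and 12 fragments, with 2 fragments in the final section.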

Usernames

The public keys and usernames are stored in a global append only data structure, with names taken on a first come first served basis. This needs consensus to ensure uniqueness of usernames. This is also where the ipfs node id of the server(s) responsible for synchronising the user's writes is stored. The public key infrastructure (pki) server is called the Corenode, and its interface is the following.

/**

*

* @param username

* @return the key chain proving the claim of the requested username and the ipfs node id of their storage

*/

CompletableFuture<List<UserPublicKeyLink>> getChain(String username);

/** Claim a username, or change the public key owning a username

*

* @param username

* @param chain The changed links of the chain

* @return True if successfully updated

*/

CompletableFuture<Boolean> updateChain(String username, List<UserPublicKeyLink> chain);

/**

*

* @param key the hash of the public identity key of a user

* @return the username claimed by a given public key

*/

CompletableFuture<String> getUsername(PublicKeyHash key);

/**

*

* @param prefix

* @return All usernames starting with prefix

*/

CompletableFuture<List<String>> getUsernames(String prefix);

Follow requests

A user's storage server stores their pending follow requests until they are retrieved and deleted. These are not actually stored in ipfs itself, and reading them is guarded by a challenge protocol. This guards against someone logging them all now and decrypting them with a large quantum computer once one is built.

Follow requests contain no unencrypted data visible to the network, or server, apart from the target user. Only the target user can decrypt the follow request to see the sender.

The interface for sending, receiving and removing follow requests is called SocialNetwork and has the following methods:

/** Send a follow request to the target public key

*

* @param target The public identity key hash of the target user

* @param encryptedPermission The encrypted follow request

* @return True if successful

*/

CompletableFuture<Boolean> sendFollowRequest(PublicKeyHash target, byte[] encryptedPermission);

/**

*

* @param owner The public identity key hash of the user whose pending follow requests are being retrieved

* @param signedTime The current time signed by the owner

* @return all the pending follow requests for the given user

*/

CompletableFuture<byte[]> getFollowRequests(PublicKeyHash owner, byte[] signedTime);

/** Delete a follow request for a given public key

*

* @param owner The public identity key hash of the user whose follow request is being deleted

* @param data The original follow request data to delete, signed by the owner

* @return True if successful

*/

CompletableFuture<Boolean> removeFollowRequest(PublicKeyHash owner, byte[] data);

Specification

The wire protocol is standard libp2p. This is specified here.

The serialization format for blocks is dag-cbor, or raw (unformatted).

Merkle links are encoded as Cids, whose specification is here.

Public signing keys are encoded in cbor, with format specified here.

Public encryption keys are encoded in cbor, with format specified here.

The cryptree+ structure is specified here.

The server API is specified here.

The Compressed Hash-Array Mapped Prefix-Tree (CHAMP) structure is specified and implemented here.

The local (service worker based) REST API for applications is described here.

Security

Peergos' primary focus is security.

Trust free levels

Peergos is designed at every level to minimise or remove entirely any need for trust.

The first trust barrier is between Peergos and IPFS. A Peergos server verifies the hash of everything written to or read from IPFS. This removes the possibility of tampering at the ipfs level.

The second trust free barrier is between a Peergos client and a Peergos server. A Peergos client verifies the hash of every block read from or written to a Peergos server. Peergos clients also verify the signature of every signed piece of data received from Peergos. This means that once you have obtained a trustworthy copy of a Peergos client you do not need to trust a server to interact with it.
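The hash check described above can be sketched as follows. This is a minimal illustration, assuming the expected SHA-256 digest has already been extracted from the CID; the class and method names are hypothetical and not part of the Peergos API.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: accept a block only if it hashes to the digest that was requested.
final class BlockVerifier {
    static byte[] sha256(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is available on every Java platform", e);
        }
    }

    /** Returns true only if the block's SHA-256 digest equals the expected digest. */
    static boolean matches(byte[] expectedDigest, byte[] block) {
        return MessageDigest.isEqual(expectedDigest, sha256(block));
    }

    public static void main(String[] args) {
        byte[] block = "example ciphertext".getBytes(StandardCharsets.UTF_8);
        byte[] digest = sha256(block);
        byte[] tampered = block.clone();
        tampered[0] ^= 1; // flip one bit, as a malicious server might
        System.out.println(matches(digest, block));    // prints true
        System.out.println(matches(digest, tampered)); // prints false
    }
}
```

Because the check needs only the block and the hash that was asked for, it can run identically on a server reading from IPFS and on a client reading from a server.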

Threat models

Peergos supports several threat models depending on the user and their situation.

Casual user:

- Trusts the SSL certificate hierarchy and the domain name system

- Is happy to run Javascript in their browser

- Trusts TLS and their browser (and OS and CPU ;-) )

Such a user can interact with peergos purely through a public web server that they trust over TLS.

Slightly paranoid user:

- Doesn't trust DNS or SSL certificates

- Is happy to run Javascript served from localhost in their browser

This class of user can download and run the Peergos application and access the web interface through their browser over localhost.

More paranoid user:

- Doesn't trust the SSL certificate system

- Doesn't trust DNS

- Doesn't trust javascript

This class of user can download the Peergos application (or otherwise obtain a signed copy), or build it from source. They can then run Peergos locally and use the native user interface, either the command line or a FUSE mount. Once they have obtained or built a copy they trust, they need trust only the integrity of TweetNaCl cryptography (or our post-quantum upgrade) and the Tor architecture.

Login

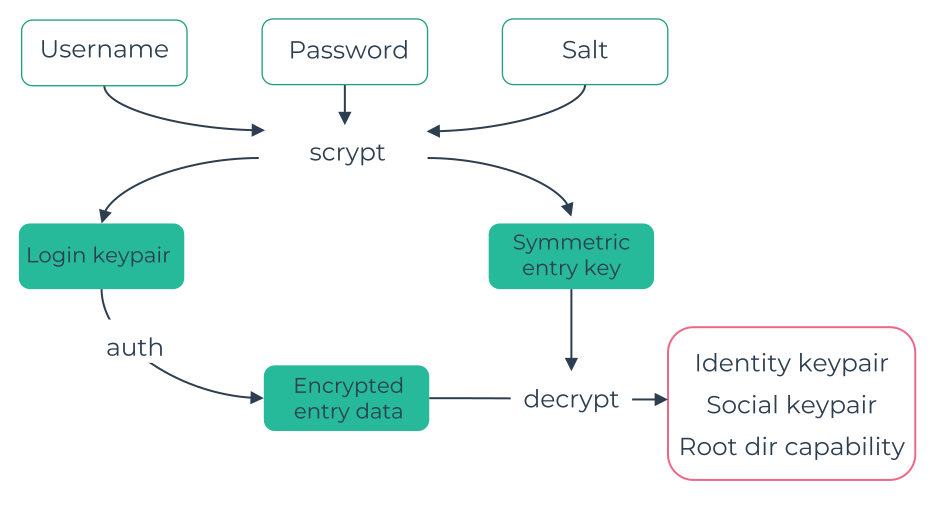

To log in, your password, username and public salt are hashed using the scrypt hashing function (with parameters 17, 8, 1, 64). The output of this is your login keypair and a symmetric key. The login keypair is used to authenticate with your home server and retrieve your encrypted login data, which is decrypted using the aforementioned symmetric key. Alternatively, a client can cache the encrypted login data to allow offline login. The login data contains your identity keypair, social keypair, and the root capability to your filesystem. The public salt and encrypted login data are stored on your home server and on any mirrors you have authorised.
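To get a feel for the cost of the scrypt step, the arithmetic below estimates its working memory. It assumes the four parameters are, in order, log2 of the cost parameter N, the block size r, the parallelism p and the output length in bytes; the text does not name them, so treat that reading as an assumption. The class name is hypothetical.

```java
// Sketch: approximate memory needed by scrypt for a given (log2 N, r) pair.
final class ScryptCost {
    /** scrypt's main working array is about 128 * N * r bytes, with N = 2^log2N. */
    static long memoryBytes(int log2N, int r) {
        return 128L * (1L << log2N) * r;
    }

    public static void main(String[] args) {
        long bytes = memoryBytes(17, 8); // assumed meaning of the first two parameters
        System.out.println(bytes / (1024 * 1024) + " MiB"); // prints "128 MiB"
    }
}
```

Under that reading, each password guess costs an attacker roughly 128 MiB of memory, which is what makes brute-forcing the login hash expensive.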

Encryption

All your files are encrypted symmetrically with a random 256-bit key using salsa20+poly1305 (from TweetNaCl). These keys are not derived from the contents of the file (as some services do) because this leaks to the network which files you are storing. Files are split into chunks of up to 5 MiB, padded to a multiple of 4 KiB, and each chunk is independently encrypted. Different chunks of a file are not linkable unless you have a read capability for that file.
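The chunking and padding arithmetic can be sketched as below. This is an illustration only: it assumes padding rounds up to the next multiple of 4096 bytes and leaves exact multiples unchanged, and the class and method names are hypothetical.

```java
// Sketch of the chunk and padding sizes described above (not the Peergos implementation).
final class ChunkLayout {
    static final long CHUNK_SIZE = 5L * 1024 * 1024; // 5 MiB
    static final long PAD_BLOCK = 4096;              // 4 KiB

    /** Number of chunks needed for a file of the given positive size. */
    static long chunkCount(long fileSize) {
        return (fileSize + CHUNK_SIZE - 1) / CHUNK_SIZE;
    }

    /** Length after rounding up to a multiple of 4 KiB (assumed padding rule). */
    static long paddedLength(long plaintextLength) {
        return (plaintextLength + PAD_BLOCK - 1) / PAD_BLOCK * PAD_BLOCK;
    }

    public static void main(String[] args) {
        long fileSize = 12_000_000L;
        long chunks = chunkCount(fileSize);
        long lastChunk = fileSize - (chunks - 1) * CHUNK_SIZE;
        System.out.println(chunks);                  // prints 3
        System.out.println(paddedLength(lastChunk)); // prints 1515520
    }
}
```

An observer who could somehow see the final chunk would learn its size only to the nearest 4 KiB, and without a read capability cannot tell which chunks belong to the same file.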

Block Access Control

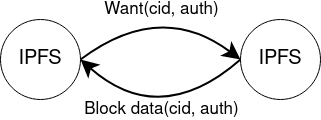

When an app on IPFS wants a block of data, it asks IPFS for the data corresponding to its content identifier, or CID (basically, a hash of the data). IPFS will then search the global IPFS network for nodes that have this CID. At the same time, it will ask any nodes it's already in contact with: "Do you have this CID?". Any contacted node that has the block can respond with the data. A nice property of this is that any node that has the content can serve it up, which means that it autoscales to demand.

Authed bitswap retrieving a block.

We have extended this protocol to have an optional auth string paired with every CID. In Peergos, this auth string is an S3 V4 signature, which is time-limited, includes the CID, and is tied to the requesting node's public key (to prevent replay attacks). A replay attack here would be someone who does not have the block, but to whom we had sent a valid auth token, using that token to retrieve the block directly. As an analogy, consider a ticketed event. If someone buys a ticket, and a friend of theirs then copies the ticket and uses the copy to gain entry, that is a replay attack. If, however, the tickets included the buyer's name (they were non-transferable) and the event verified the holder's name on entry, then the friend couldn't get in, even with the original ticket.

We do a similar thing to avoid this by using the source node's public key as the domain in the S3 request. This way we can broadcast a cid and auth string to the network and no one but us can use that auth string. The S3 V4 signature scheme is essentially repeated hmac-sha256 and needs a secret key to function. Such a secret key would grant the holder access to the block, so we call it a Block Access Token or BAT for short, and each is 32 bytes long. Since it only depends on hmac-sha256, which itself only depends on sha256, it is post-quantum - a large quantum computer does not break it.
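The "repeated hmac-sha256" structure of an S3 V4 signature can be sketched as below, following the standard AWS Signature Version 4 key derivation. How Peergos encodes the BAT as the secret, and the exact region, service and string to sign it uses, are not stated here, so every concrete value in `main` is a hypothetical placeholder.

```java
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;

// Sketch of the S3 V4 HMAC chain. Only hmac-sha256 is involved, as noted above.
final class SigV4Sketch {
    static byte[] hmac(byte[] key, String message) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(message.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Standard SigV4 derivation: secret -> date -> region -> service -> "aws4_request". */
    static byte[] signingKey(String secret, String date, String region, String service) {
        byte[] kDate = hmac(("AWS4" + secret).getBytes(StandardCharsets.UTF_8), date);
        byte[] kRegion = hmac(kDate, region);
        byte[] kService = hmac(kRegion, service);
        return hmac(kService, "aws4_request");
    }

    static byte[] sign(String secret, String date, String region, String service, String stringToSign) {
        return hmac(signingKey(secret, date, region, service), stringToSign);
    }

    public static void main(String[] args) {
        // All values below are placeholders for illustration only.
        String bat = "00112233445566778899aabbccddeeff00112233445566778899aabbccddeeff";
        byte[] sig = sign(bat, "20240101", "us-east-1", "s3", "placeholder string to sign");
        System.out.println(sig.length); // prints 32
    }
}
```

In the real protocol the string to sign is derived from a canonical request that includes the host (here, the requesting node's public key), which is what ties a signature to a single requester.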

The primary BAT used for this authentication is derived from the block itself. This means any instance that retrieves such a block (after being authorised) can continue to serve it up and enforce the same access control, thus maintaining the autoscaling properties in a privacy-preserving way.

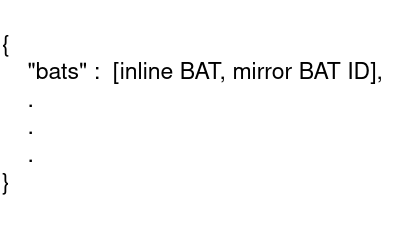

There are two formats of blocks in Peergos, cbor and raw. Raw blocks are the most sensitive (they hold users' encrypted data) and are just fragments of ciphertext with no additional structure. Cbor blocks are valid dag-cbor structured IPLD objects which can reference other blocks. How could we put a BAT in these blocks? In a cbor block, it is easy to choose a canonical place to put a list of BATs. If the cbor is a map object, we put a list of BATs at the top level under the key "bats".

Structure for storing BATs in cbor blocks

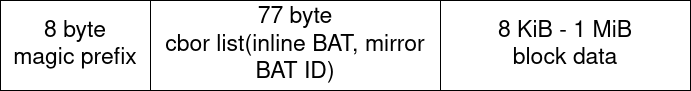

For raw objects, it is a little more difficult, as we also need to support raw blocks that do not have a BAT (either legacy blocks or ones specifically made public). Our design uses a detectable prefix of 8 FIXED bytes followed by a cbor list of BATs before the actual ciphertext of the block.

Structure for storing BATs in the prefix of a raw block

We normally have two BATs per block. One is inline and specific to that block only. The other is a user-wide "mirror" BAT, referenced in the block by its hash. The mirror BAT is for when a user wants to mirror all their data on another instance, or migrate to another instance.

| | Chunk 1 | Chunk 2 | Chunk 3 |
|---|---|---|---|
| BAT stream secret | Sb (encrypted in base data) | | |
| BAT[] (unencrypted in root cbor object under "bats") | B1=randomBytes(32) | B2=hash(Sb + B1) | B3=hash(Sb + B2) |

Each 5 MiB chunk of a file or directory has its own unique BAT, so the server still cannot link the different blocks of a file to deduce the padded size of the file. Subsequent chunk BATs within a file are derived in the same way as we do the CHAMP labels, by hashing the current chunk BAT with a stream-secret, stored encrypted in the first chunk. This maintains our ability to seek within arbitrarily large files without any IO operations (just local hashing and then a final lookup of the requested chunk). When someone's access to a file or directory is revoked, the BATs are also changed, making it impossible to retrieve the new ciphertext even with previous access.
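The derivation of subsequent chunk BATs can be sketched as follows. It assumes the hash is SHA-256 and that "+" means byte concatenation of the stream secret followed by the current BAT; the class and method names are hypothetical.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Sketch: derive the BAT of any chunk from the first BAT and the stream secret.
final class BatStream {
    /** next = hash(streamSecret + currentBat), assuming SHA-256 and concatenation. */
    static byte[] next(byte[] streamSecret, byte[] currentBat) {
        try {
            MessageDigest sha256 = MessageDigest.getInstance("SHA-256");
            sha256.update(streamSecret);
            sha256.update(currentBat);
            return sha256.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Seeks to a chunk (0-based) using local hashing only, with no IO. */
    static byte[] batForChunk(byte[] streamSecret, byte[] firstBat, int chunkIndex) {
        byte[] bat = firstBat;
        for (int i = 0; i < chunkIndex; i++) {
            bat = next(streamSecret, bat);
        }
        return bat;
    }

    public static void main(String[] args) {
        byte[] streamSecret = new byte[32]; // placeholder, random in practice
        byte[] firstBat = new byte[32];     // placeholder, random in practice
        System.out.println(batForChunk(streamSecret, firstBat, 2).length); // prints 32
    }
}
```

Seeking to chunk n costs n hashes of 64 bytes each, which is negligible next to a single network lookup.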

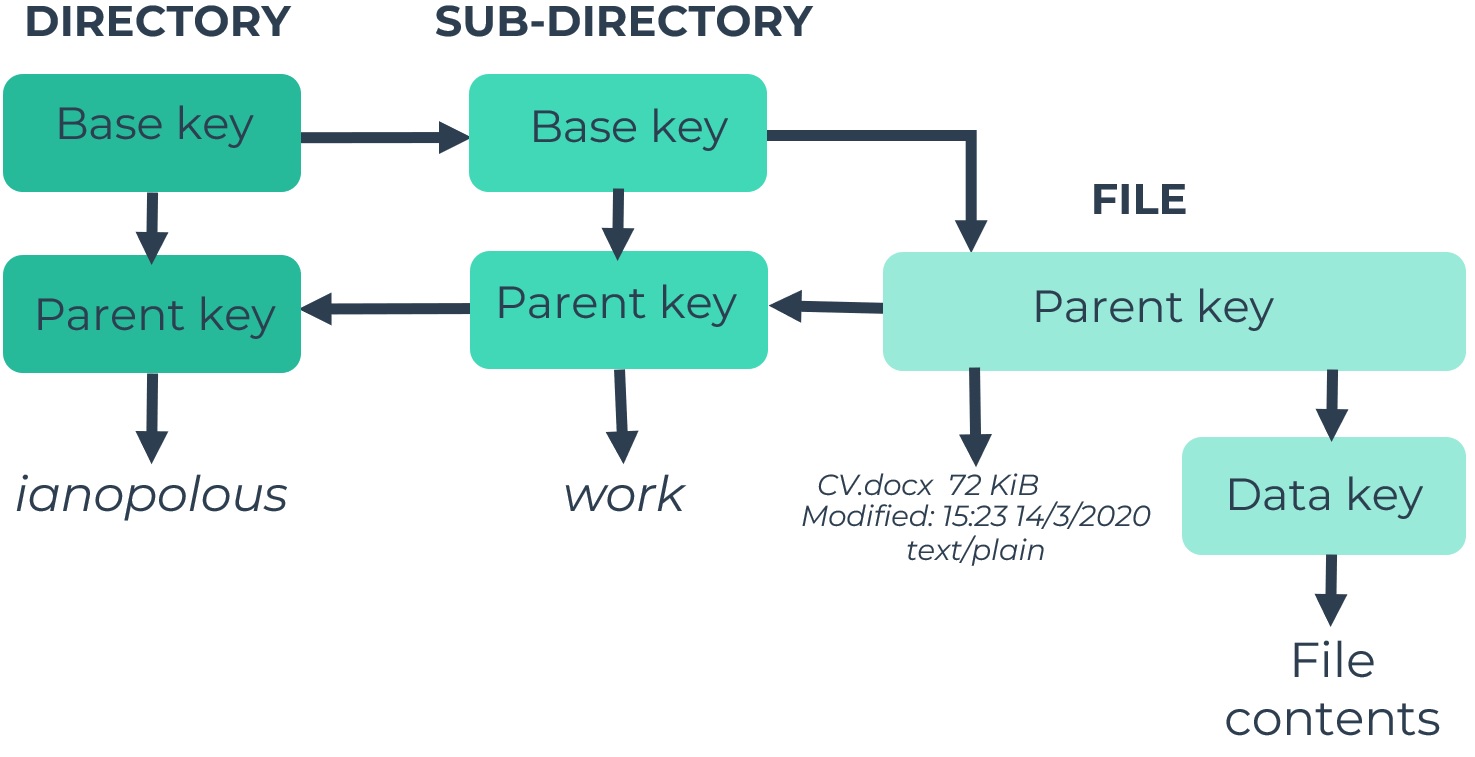

Access control

Read access to your files is controlled by a data structure called cryptree, which is essentially a tree of symmetric keys, where the holder of one key can decrypt all the descendant keys. The result is extremely fine grained access control. You can grant access to someone to a file and that user won't be able to see any of the sibling files in the same folder (or even their names - or even their labels in the champ). Granting read access to a folder implies granting read access to all the contents of the folder recursively.

Write access is independently controlled by a similar, but simpler cryptree. All updates to a given subtree are signed by a corresponding writing key pair. When you grant write access to a file or folder then that item is moved to a new writing key pair, to keep the fine grained access control applicable to write access too. This operates independently of the read access control cryptree.
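The core idea of the read cryptree, that holding one key lets you decrypt its descendant keys but not its siblings, can be sketched as below. Note the swap: this sketch uses AES-GCM from the Java standard library rather than the salsa20+poly1305 construction Peergos uses, and all names are hypothetical.

```java
import javax.crypto.Cipher;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Arrays;
import java.util.Optional;

// Sketch of a key tree: a parent stores each child's key encrypted under its own key.
final class KeyTreeSketch {
    private static final SecureRandom RNG = new SecureRandom();

    static byte[] randomKey() {
        byte[] key = new byte[32];
        RNG.nextBytes(key);
        return key;
    }

    /** Encrypts childKey under parentKey. Output is a 12-byte nonce followed by ciphertext and tag. */
    static byte[] wrap(byte[] parentKey, byte[] childKey) {
        try {
            byte[] nonce = new byte[12];
            RNG.nextBytes(nonce);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, new SecretKeySpec(parentKey, "AES"), new GCMParameterSpec(128, nonce));
            byte[] ciphertext = cipher.doFinal(childKey);
            byte[] out = Arrays.copyOf(nonce, nonce.length + ciphertext.length);
            System.arraycopy(ciphertext, 0, out, nonce.length, ciphertext.length);
            return out;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Returns the child key, or empty if the supplied key is not the parent key. */
    static Optional<byte[]> unwrap(byte[] key, byte[] wrapped) {
        try {
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, new SecretKeySpec(key, "AES"), new GCMParameterSpec(128, wrapped, 0, 12));
            return Optional.of(cipher.doFinal(wrapped, 12, wrapped.length - 12));
        } catch (GeneralSecurityException e) {
            return Optional.empty(); // authentication failed: wrong key or tampered link
        }
    }

    public static void main(String[] args) {
        byte[] folderKey = randomKey();
        byte[] fileAKey = randomKey();
        byte[] fileBKey = randomKey();
        byte[] linkToB = wrap(folderKey, fileBKey);
        System.out.println(unwrap(folderKey, linkToB).isPresent()); // prints true
        System.out.println(unwrap(fileAKey, linkToB).isPresent());  // prints false
    }
}
```

Sharing `fileAKey` therefore reveals nothing about the sibling file, while sharing `folderKey` gives access to every link stored in that folder, recursively.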

Capabilities

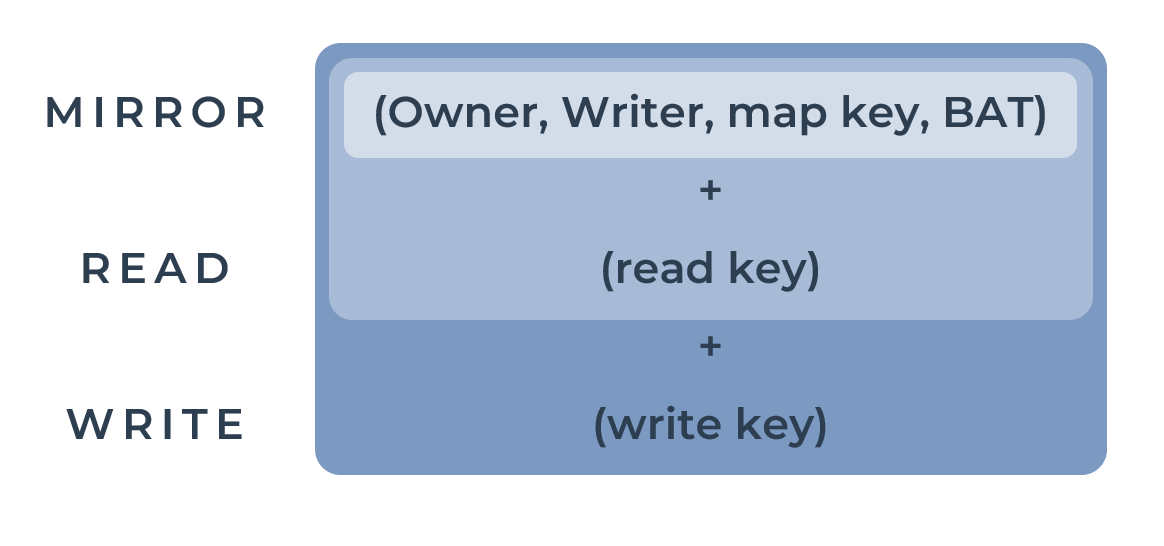

There are three kinds of capabilities in Peergos: Mirror, Read and Write.

The owner is a public key, which is used to look up the host public key from the PKI. The writer is a public key for the mutable pointer. The map key is 32 bytes which form the lookup key in the champ, and the BAT is 32 bytes which the server uses to control access to the ciphertext. The read and write keys are 256-bit symmetric keys.

With a mirror capability you are able to retrieve the raw ciphertext of a chunk. The read capability allows you to decrypt and read the chunk, and the write key allows you to modify the file/directory.
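One way to picture the three kinds of capability is as a single structure in which each kind adds one more key to the previous one. That layering is an assumption made for this sketch, as are the class name, the use of strings for the public keys, and the method names; the real encoding is not described here.

```java
import java.util.Optional;

// Sketch: mirror < read < write, each adding one 32-byte symmetric key.
final class CapabilitySketch {
    final String owner;   // owner public key (a String here for brevity)
    final String writer;  // public key for the mutable pointer
    final byte[] mapKey;  // 32-byte lookup key in the champ
    final byte[] bat;     // 32-byte block access token
    final Optional<byte[]> readKey;
    final Optional<byte[]> writeKey;

    private CapabilitySketch(String owner, String writer, byte[] mapKey, byte[] bat,
                             Optional<byte[]> readKey, Optional<byte[]> writeKey) {
        if (mapKey.length != 32 || bat.length != 32)
            throw new IllegalArgumentException("map key and BAT must be 32 bytes");
        this.owner = owner;
        this.writer = writer;
        this.mapKey = mapKey;
        this.bat = bat;
        this.readKey = readKey;
        this.writeKey = writeKey;
    }

    static CapabilitySketch mirror(String owner, String writer, byte[] mapKey, byte[] bat) {
        return new CapabilitySketch(owner, writer, mapKey, bat, Optional.empty(), Optional.empty());
    }

    static CapabilitySketch read(String owner, String writer, byte[] mapKey, byte[] bat, byte[] readKey) {
        return new CapabilitySketch(owner, writer, mapKey, bat, Optional.of(readKey), Optional.empty());
    }

    static CapabilitySketch write(String owner, String writer, byte[] mapKey, byte[] bat,
                                  byte[] readKey, byte[] writeKey) {
        return new CapabilitySketch(owner, writer, mapKey, bat, Optional.of(readKey), Optional.of(writeKey));
    }

    boolean canRead() { return readKey.isPresent(); }

    boolean canWrite() { return readKey.isPresent() && writeKey.isPresent(); }

    public static void main(String[] args) {
        CapabilitySketch cap = mirror("owner-key", "writer-key", new byte[32], new byte[32]);
        System.out.println(cap.canRead()); // prints false
    }
}
```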

Metadata

All of the metadata for a given file is encrypted, with a different symmetric key from the file itself. This includes the name for directories and also the file size, modification time, any thumbnail and mime type for files. The size of files is further hidden by splitting files into 5 MiB chunks, which are padded to a multiple of 4 KiB, and storing each chunk under a random label (along with those for all other files owned by the same user and controlled by the same writing key pair).

The metadata around access patterns will be hidden by hosting files behind a tor hidden service once Tor is integrated. This will ensure that when one user reads a file shared with them by a friend this access does not leak to the network the fact that they are friends.

Quantum resistance

Peergos aims to be a long term secure file storage system, and hence we have architected it with an awareness of quantum computer based attacks (many of us are ex physicists).

Files that you store but don't share with anyone are already resistant to quantum computer based attacks. This is because the process from logging in to decrypting them only involves hashing and symmetric encryption, neither of which are significantly weakened by a quantum computer.

Files that have been shared are currently vulnerable to a quantum computer attack because they use asymmetric elliptic curve cryptography (Curve25519) to share the decryption capability. However, we plan to upgrade to a suitable post-quantum algorithm soon.

Social graph

Following a user is implemented by them sharing read access to a directory in their filesystem. The read capability is sent encrypted from a random single use keypair to the target user's public key. These requests will be sent over Tor to that user's hidden service to hide the metadata from the network. Once retrieved, the receiving user stores the capability in their own storage, symmetrically encrypted and deletes the follow request from their server.

Public Key Infrastructure

All users have a public identity key, and these are stored in a merkle-champ. This structure is mirrored by all nodes (or you can delegate to a mirror you trust). This includes the user's claim to a username along with an expiry, and their current storage server's public key. The effect is similar to certificate or key transparency logs. In contrast though, key transparency logs are not normally used as the source of truth, but only checked retrospectively, occasionally for some users. So this gives much stronger guarantees whilst maintaining the append-only nature. Mirrors reject updates that are not append-only, so the pki cannot tamper with the mappings.

This allows users to do public key lookups without leaking to the network who they are looking up. Users also store the keys of their friends in their own filesystem in a TOFU setup, which also rejects invalid updates. This means that ordinary usage doesn't involve looking up keys from the public pki servers.

How does it work?

This section goes into technical detail about how different operations work in Peergos.

Signing up

The steps involved in signing up are:

- Register the username

  - Hash the password and username through scrypt to get the auth key pair and the symmetric root key.

  - Generate a random identity keypair

  - Generate a signed username claim including an expiry, and the ipfs node id of the storage server (the server we are signing up through). This is just identity.sign(username, expiry, [storage id]).

  - Send this claim to the pki node for confirmation

- Set up your identity

  - Write the public identity key to ipfs

  - Write the public following key to ipfs

  - Create a WriterData for the identity key pair with the two resulting public key hashes

  - Generate a random key pair to control writes to the user's filesystem. Add this key pair as an owned key to the identity WriterData.

  - Commit the identity WriterData (write it to ipfs and set the mutable pointer for the identity key pair to the resulting hash).

- Set up your filesystem

  - Create a DirAccess cryptree node for the user's root directory, and add this to the champ of the filesystem key pair.

  - Add a write capability to your root dir to your login data (encrypted with the symmetric root key, and only retrievable with the auth key pair)

  - Create the /username/shared directory which is used when sending follow requests

Uploading a file

A file upload proceeds in the following steps:

- Check filename is valid and free

- Create a transaction file with a plan for the upload

- Generate a stream secret for the file (32 random bytes) which is stored in the encrypted file metadata

- For every section of the file which is up to 5 MiB:

  - Pad plaintext to a multiple of 4096 bytes.

  - Encrypt the padded 5 MiB file section with a random symmetric key

  - Split the cipher text into 1 MiB fragments

  - Create a FileAccess cryptree node with merkle links to all the resulting fragments

  - Add the FileAccess to the champ of the writing key pair (under a random 32 byte label for initial chunks, or sha256(stream secret + previous label))

- Add a cryptree link from the parent directory to the file

- Delete the transaction file
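The fragment-splitting step in the list above can be sketched as follows. This is an illustration with hypothetical names; it ignores any authentication overhead the cipher adds and simply cuts a byte array into pieces of at most the given size.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch: cut one encrypted section into fragments of at most 1 MiB each.
final class Fragmenter {
    static final int FRAGMENT_SIZE = 1024 * 1024; // 1 MiB

    static List<byte[]> split(byte[] ciphertext, int fragmentSize) {
        if (fragmentSize <= 0) throw new IllegalArgumentException("fragment size must be positive");
        List<byte[]> fragments = new ArrayList<>();
        for (int start = 0; start < ciphertext.length; start += fragmentSize) {
            int end = Math.min(start + fragmentSize, ciphertext.length);
            fragments.add(Arrays.copyOfRange(ciphertext, start, end));
        }
        return fragments;
    }

    public static void main(String[] args) {
        byte[] section = new byte[5 * FRAGMENT_SIZE]; // stands in for one encrypted 5 MiB section
        System.out.println(split(section, FRAGMENT_SIZE).size()); // prints 5
    }
}
```

Each resulting fragment would then be written as a raw block, and its hash stored as a merkle link in the FileAccess node for that section.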

A modification, such as uploading a file, can be done through any Peergos server as the writes are proxied through an ipfs p2p stream to the owner's storage ipfs node.

Sending a follow request

Sending a follow request proceeds in the following steps:

- Look up the target friend's public following key

- Create a directory /our-name/shared/friend-name

- Encrypt a read capability for that directory using a random key pair to the target's following key. Using a random keypair ensures that no one but the target friend can see who sent the request.

- Send the follow request to the storage server of the target friend

The target can then either allow and reciprocate (full bi-directional friendship), allow (you are following them), reciprocate (they are following you) or deny. If they have reciprocated then you can grant read or write access to any file or folder by adding a read or write capability in their directory in your space.

When you receive a follow request and either allow or reciprocate it, you add the capability in the request to a file in your home directory (/your-username/.from-friends.cborstream) before deleting the follow request from your server.

Proxying requests

Any modifying request needs to be proxied to the correct destination server. This could be signing up, uploading a file, or sending a follow request. This is achieved using an ipfs p2p stream. In particular, because all these requests are http requests, we use the http p2p proxy exposed locally on the ipfs gateway. It means we can send any request to

http://localhost:8080/p2p/$target_node_id/http/$path

and it will go through an end to end encrypted stream through the ipfs network to the destination node, which then sends it to the local Peergos server at:

http://localhost:8000/$path
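Building the proxied URL from the pattern above can be sketched as follows. The gateway address comes from the text; the class name, the node id and the path in `main` are hypothetical placeholders.

```java
import java.net.URI;

// Sketch: build the local p2p proxy URL for a request to a remote Peergos server.
final class ProxyUrl {
    static URI proxied(String gateway, String targetNodeId, String path) {
        // Drop a leading slash so the result does not contain "//" after "http".
        String relative = path.startsWith("/") ? path.substring(1) : path;
        return URI.create(gateway + "/p2p/" + targetNodeId + "/http/" + relative);
    }

    public static void main(String[] args) {
        // "QmExampleNodeId" and "some/path" are placeholders, not real values.
        System.out.println(proxied("http://localhost:8080", "QmExampleNodeId", "/some/path"));
        // prints http://localhost:8080/p2p/QmExampleNodeId/http/some/path
    }
}
```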

This is illustrated below: